Embeddings & Representation Learning in ML


When solving machine learning problems, we come across many different data types that we need to handle. One of the most common is categorical data.

These are values that are categories (labels) rather than numbers, such as a profession or a species.

Problem with Categorical Data

Most machine learning algorithms expect numerical input, so categorical data has to be converted to numbers before a model can use it. How we do that conversion affects how accurate and robust the predictions are. Common methods include:

- Ordinal Encoding

- One Hot Encoding

Ordinal encoding works best for data that has a natural order. In real-world data science projects, however, categorical data without any order is more common, and for that we use one hot encoding.
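To make the difference concrete, here is a minimal sketch of both encodings in plain Python. The helper names (`ordinal_encode`, `one_hot_encode`) and the sample values are made up for illustration; in practice you would probably use a library encoder instead.

```python
def ordinal_encode(values, order):
    """Map each category to its rank in an explicitly given order."""
    rank = {category: i for i, category in enumerate(order)}
    return [rank[v] for v in values]


def one_hot_encode(values):
    """Map each category to a 0/1 vector containing a single 1."""
    categories = sorted(set(values))  # fixes the column order
    index = {category: i for i, category in enumerate(categories)}
    rows = []
    for v in values:
        row = [0] * len(categories)
        row[index[v]] = 1
        rows.append(row)
    return categories, rows


# Ordered categories (hypothetical): integers preserve small < medium < large.
sizes = ["small", "large", "medium", "small"]
size_codes = ordinal_encode(sizes, order=["small", "medium", "large"])

# Unordered categories (hypothetical): no colour is "greater" than another.
colours = ["red", "green", "blue", "green"]
colour_columns, colour_rows = one_hot_encode(colours)

print(size_codes)      # [0, 2, 1, 0]
print(colour_columns)  # ['blue', 'green', 'red']
print(colour_rows)     # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```

Note that ordinal encoding needs the order to be supplied by hand, while one hot encoding only needs the set of categories.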

Problem with One Hot Encoding

One hot encoding creates one new feature for every unique category in each categorical column (attribute) of the dataset.
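A quick way to see this is to count the columns one hot encoding would produce. The sketch below uses made-up data: a `profession` column with 4 distinct values and a `zip_code` column with 500. The function name `one_hot_shape` is hypothetical.

```python
def one_hot_shape(values):
    """Return (rows, columns) of the one hot matrix for one attribute."""
    return len(values), len(set(values))


# Hypothetical data: 1,000 rows each.
profession = ["doctor", "engineer", "teacher", "farmer"] * 250
zip_code = [str(10000 + (i % 500)) for i in range(1000)]

profession_shape = one_hot_shape(profession)
zip_code_shape = one_hot_shape(zip_code)

print(profession_shape)  # (1000, 4)
print(zip_code_shape)    # (1000, 500)

# Each row holds exactly one 1, so the matrix becomes mostly zeros
# as the number of categories grows.
nonzero_fraction = 1 / zip_code_shape[1]
print(nonzero_fraction)  # 0.002
```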

So, when we have a large number of possible categories (like profession, species, zip-code), then one hot encoding will result in a large number of input features.

This may slow down the training or degrade the performance of the model. What to do then?

Embeddings & Representation Learning

As noted above, a categorical attribute with a large number of possible categories produces a large number of one hot features, which may slow down training or degrade performance. If this happens, you may want to replace the categorical attribute with a numerical feature related to the category.

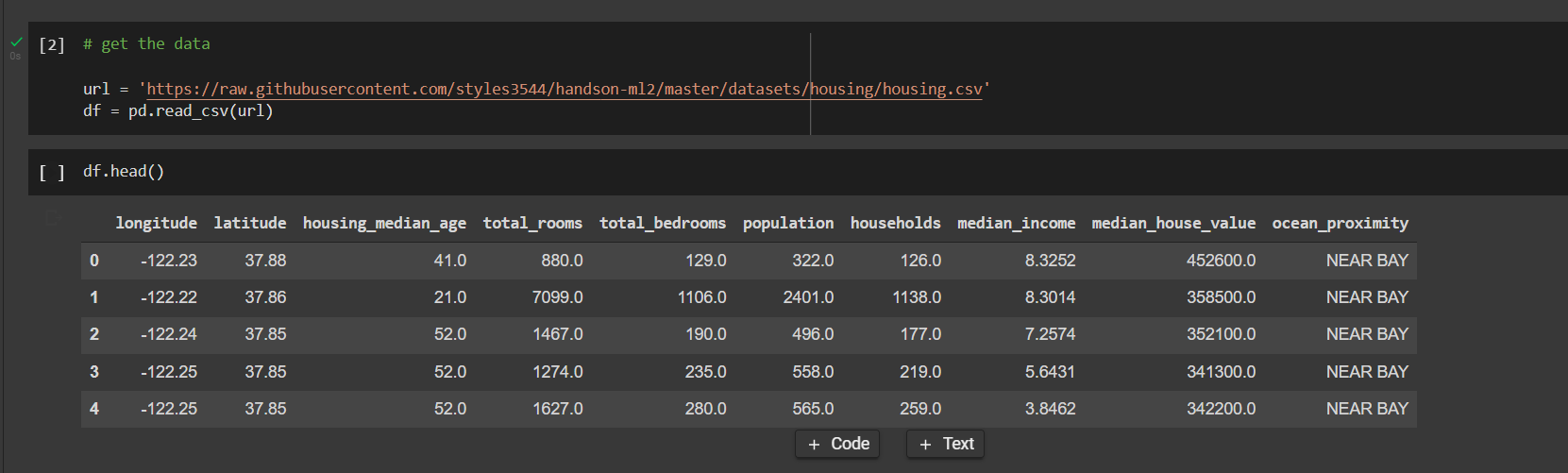

For example, the “ocean_proximity” column in the dataset pictured here can be replaced with a new numerical column, “distance_from_ocean”.

Similarly, a zip code can be replaced with a numerical attribute of the region it belongs to, such as that region's GDP or population. Alternatively, you can replace each category with a learnable, low-dimensional vector called an embedding. Each category's representation is learned during training, which is an example of representation learning.
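The idea of an embedding can be sketched as a small lookup table with one trainable row per category. The example below is a toy in plain Python, not how a deep learning framework implements it. The category names, the dimension of 2, and the `target` vector are all assumptions made for illustration. In a real model the gradient would come from the loss of the whole network, not from a fixed target.

```python
import random

random.seed(0)  # reproducible initial values

categories = ["doctor", "engineer", "teacher", "farmer"]  # hypothetical
index = {c: i for i, c in enumerate(categories)}
embedding_dim = 2  # far fewer columns than one per category

# Embedding table: one small trainable vector per category.
table = [[random.uniform(-0.1, 0.1) for _ in range(embedding_dim)]
         for _ in categories]


def embed(category):
    """Look up a category's vector (same result as one_hot @ table)."""
    return table[index[category]]


def sgd_step(category, grad, lr=0.1):
    """Gradient step that touches only the looked-up row."""
    row = table[index[category]]
    for j in range(embedding_dim):
        row[j] -= lr * grad[j]


before = [row[:] for row in table]

# Toy objective (assumption): squared distance to a fixed target vector,
# standing in for whatever direction the real training loss prefers.
target = [1.0, -1.0]
for _ in range(50):
    e = embed("teacher")
    grad = [2 * (e[j] - target[j]) for j in range(embedding_dim)]
    sgd_step("teacher", grad)

print(embed("teacher"))  # close to [1.0, -1.0] after training
print(embed("doctor") == before[index["doctor"]])  # True: row untouched
```

The point of the sketch is that each category maps to a short dense vector, and that training adjusts only the vectors of the categories actually seen in the data.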
